Ask the AI Expert: “How Should I Be Leveraging Machine Learning Operations (ML Ops) to Put AI in Production?”

A lot can happen in the technology industry in a few months’ time. The AI team within Zebra’s Chief Technology Office refocuses its research on a new technology or use case about every six months. Yet, the best practices derived from each research initiative seem to have an infinite shelf life, if only to help us (as humans) avoid repeating past mistakes as we push toward even better outcomes with AI and other advanced technologies. That’s why we recently connected with Andrea Mirabile, AI Director at Zebra, to find out what he shared during the “Leveraging Machine Learning Operations (ML Ops) to Put AI in Production” session at the 2022 Nvidia GPU Technology Conference (GTC). Though it has been several months since he sat down with Omri Geller, CEO and Co-Founder, Run:ai; Scott McClellan, Senior Director, Data Science, NVIDIA; and Rebecca Li, Director of Strategy, Weights & Biases for the panel, the topics they discussed are becoming more relevant each day, including…

how to manage and monitor AI models in production.

how to improve collaboration between data scientists, engineers, and IT teams.

how to ensure the quality and reliability of AI models in production.

the challenges and best practices around scaling, monitoring, and maintaining AI models in production.

These experts also provided examples of how different organizations implement ML Ops to successfully put AI models into production and explained how the commercialization of AI products is often where failure occurs – not necessarily in the R&D or testing phases.

Andrea leads the group at Zebra which focuses on advancing image, video, and multi-modal understanding for various technology platforms and for the benefit of the research community. So, he has a lot of experience with ML Ops. Here’s a rundown of our conversation:

Your Edge Blog Team: What were some of the big themes the AI developer community was focused on at the Nvidia Conference?

Andrea: The big themes included high-performance computing, edge computing, metaverse, robotics and autonomous systems, artificial intelligence (AI) and deep learning. Since I focus on AI, deep learning, and ML Ops day to day, I mainly covered the advancements I’ve seen in vision and natural language processing, especially large language models (LLMs), as well as techniques for improving the performance and interpretability of deep neural networks and applications of AI and deep learning in fields such as autonomous vehicles, healthcare, and financial services.

From an ML Ops perspective, I also shared strategies my team and I use for improving the speed, efficiency, and reliability of the machine learning development cycle and the tools and methodologies we find most helpful for automating the deployment, monitoring, and management of machine learning models. Of course, we covered best practices for scaling machine learning models to meet the demands of large-scale production environments.

Overall, the focus was to showcase the latest technological advancements and provide a platform for developers, researchers, and industry experts to exchange ideas and collaborate on new solutions in the field of AI and deep learning.

Your Edge Blog Team: What specifically did you stress to the audience? What best practices should they adopt?

Andrea: We touched on many different aspects, from version control, reproducibility, and continuous integration and deployment to monitoring, logging, and model versioning. We also covered two areas that are not often considered part of ML Ops but should be embraced: collaboration, and security and privacy.

It is so important to adopt tools and practices that encourage communication and knowledge sharing among AI development team members. This includes using shared repositories, documenting processes, and conducting regular code reviews to ensure code quality and consistency.

Developers should be aware of the security and privacy implications of deploying machine learning models. It is important to implement appropriate access controls, data anonymization techniques, and encryption measures to protect sensitive data and prevent unauthorized access.
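As an illustration of the anonymization point (ours, not something shown on the panel), here is a minimal Python sketch that replaces a sensitive field with a keyed hash before the record reaches a training pipeline. The key, the field names, and both helper functions are hypothetical. Strictly speaking this is pseudonymization rather than full anonymization, so it would normally sit alongside access controls and encryption, not replace them.

```python
import hashlib
import hmac

# Hypothetical secret for illustration; a real key would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a keyed SHA-256 digest of the value as a 64-character hex string."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def anonymize_record(record: dict, sensitive_fields: set) -> dict:
    """Copy a record, replacing the sensitive fields with their pseudonyms."""
    return {
        name: pseudonymize(str(value)) if name in sensitive_fields else value
        for name, value in record.items()
    }


# Hypothetical record: only customer_id is treated as sensitive.
record = {"customer_id": "C-1001", "basket_total": 42.5}
safe = anonymize_record(record, {"customer_id"})
```

Because the hash is keyed and deterministic, the same customer still maps to the same token, so records can be joined for training without exposing the raw identifier.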

Your Edge Blog Team: There is a lot of misunderstanding right now in the general public about AI and machine learning. So, do you find there are common misperceptions that also extend to the developer community?

Andrea: On occasion. For example, there are three beliefs about ML that I think are important to mention because they aren’t necessarily true.

First, I’ve heard people claim, “ML Ops is solely the responsibility of the data science team.” The reality is that ML Ops is a collaborative effort involving data scientists, developers, operations teams, and other stakeholders. Developers should actively participate in building and maintaining the ML Ops pipeline to ensure the successful deployment and management of models.

Secondly, some say, “ML Ops is only about deploying models to production.” However, that’s misleading. While deploying models is a significant aspect of ML Ops, it is not the only one. ML Ops encompasses the entire lifecycle of a machine learning model, including data pre-processing, data versioning, model training, deployment, monitoring, and retraining.

Finally, it’s wrong to believe that “ML Ops is a one-time setup.” ML Ops is an ongoing process that requires continuous improvement and iteration. Developers should regularly evaluate and optimize their ML Ops pipeline to adapt to changing requirements, emerging technologies, and evolving best practices.

Your Edge Blog Team: What is the role of ML Ops in research and development (R&D) at Zebra?

Andrea: Generally speaking, ML Ops is the practice of combining software development best practices with machine learning in order to put AI models into production. It aims to improve collaboration between data scientists, engineers, and IT teams, and to automate the process of deploying, scaling, and maintaining AI models in production environments.

Specifically at Zebra, ML Ops helps us ensure that the models we create are deployed, monitored, and maintained in a way that is similar to software development. The goal is to ensure that the models are always working as expected for customers, providing high accuracy results, and able to be updated as needed with minimal or no downtime.

Your Edge Blog Team: Where do you primarily focus ML Ops in Zebra R&D?

Andrea: There are five areas where we find ML Ops practices most helpful:

Automation: Automating the process of model development, testing, deployment and monitoring.

Scalability: Enabling the ability to scale models up or down based on demand.

Governance: Ensuring models are compliant with regulatory requirements and meet the standards set by both Zebra and our customers.

Monitoring: Continuously monitoring the model's performance, accuracy and data drift.

Managing the lifecycle: Managing the end-to-end lifecycle of the model and providing transparency to stakeholders.

The correct implementation of ML Ops also helps us to improve the speed, quality, and reliability of our AI models in production, automate the deployment process to minimize downtime and errors, continuously monitor the models for performance and accuracy, and incorporate feedback and updates to improve the models over time.
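To make the data drift item in the list above more concrete, here is a minimal sketch of one common drift measure, the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample and in recent production data. This is an illustrative choice on our part, not a description of Zebra's monitoring stack; the function name, bin count, and sample data are assumptions.

```python
import math


def psi(reference, current, bins=10):
    """Population Stability Index between two numeric samples."""
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 if every reference value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(sample), 1e-6) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Hypothetical feature values: training-time sample vs. a shifted production sample.
reference = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in reference]
drift_score = psi(reference, shifted)
```

A frequently cited rule of thumb treats a PSI above roughly 0.2 as a sign of meaningful shift, but the right threshold depends on the feature and the application.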

Your Edge Blog Team: What specific problems is the CTO AI research team trying to solve, and for whom?

Andrea: Right now, we are trying to solve various challenges that retailers face in operating their stores, such as engaging associates, optimizing inventory and elevating the customer experience.

Computer vision applications can play a key role in improving the customer experience at checkout. For example, computer vision can be used for queue management to ensure shorter wait times, as part of self-checkout systems that reduce friction and make the checkout process faster and more convenient, as well as for fraud detection to ensure secure transactions.

In addition to supporting a better customer experience, these types of applications help retailers improve their operational efficiency and better manage their inventory. Some of the areas in which we are focusing our R&D include:

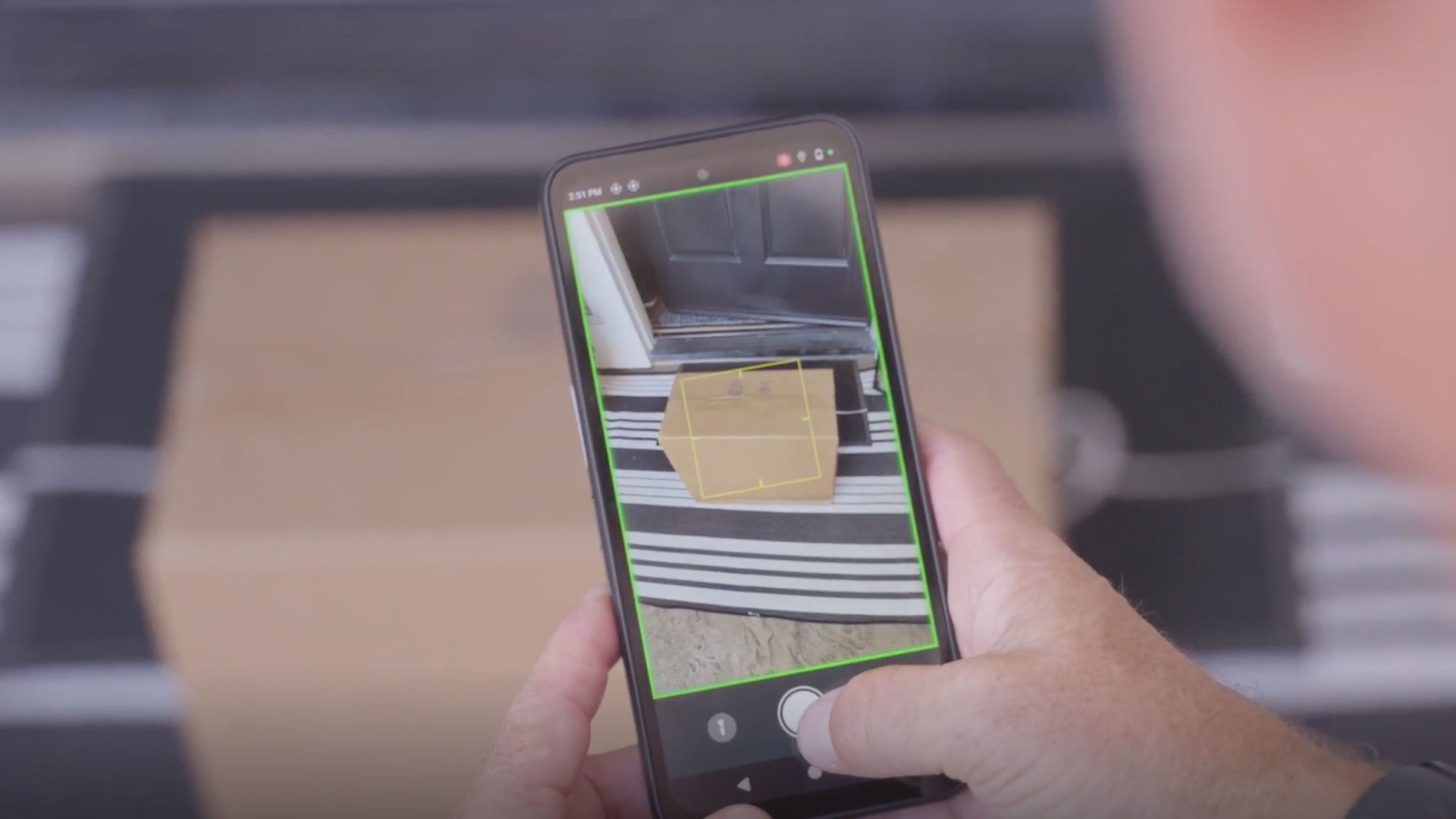

Inventory tracking: By helping retailers track inventory levels in real time, we can help reduce the likelihood they’ll experience stockouts or overstocking. By using cameras to scan barcodes or QR codes, retailers can keep track of stock levels, product movements and locations, and more.

Automated shelf-monitoring: Retailers can use computer vision to monitor shelves to ensure items are properly stocked and priced. This can help retailers quickly identify out-of-stock items, pricing errors, and other issues that could impact the customer experience and result in losses.

Improved picking accuracy: Retailers can give workers computer vision-powered wearable devices or handheld devices to guide them through the picking process. This can reduce picking errors, minimize the time needed to complete tasks, and improve overall productivity.

Streamlined returns processing: Retailers can automate the returns process by using cameras to scan barcodes or QR codes. This can help speed up the returns process, reduce errors and increase the accuracy of the inventory data.

Your Edge Blog Team: You mentioned that you shared strategies at the Nvidia Conference for improving the speed, efficiency, and reliability of the machine learning development cycle. Can you go into detail about a few of them for our readers?

Andrea: Building efficient data pipelines can enhance the speed and reliability of the development cycle. A data pipeline is a series of interconnected steps and processes that transform raw data into a usable format for machine learning tasks. It involves collecting, ingesting, pre-processing, and transforming data, as well as feeding it into machine learning models for training or inference. Data pipelines automate these steps, ensuring consistency, reproducibility, and scalability in data processing workflows.
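The steps described above can be sketched as a chain of small functions, each taking the output of the previous one. This is only an illustration under simple assumptions: the `sku,quantity` input format, the step names, and the `low_stock` feature are all hypothetical.

```python
from typing import Callable, Iterable, List


def ingest(raw_lines: Iterable[str]) -> List[dict]:
    """Parse 'sku,quantity' lines into records (quantity is still a string)."""
    records = []
    for line in raw_lines:
        sku, quantity = line.strip().split(",")
        records.append({"sku": sku, "quantity": quantity})
    return records


def preprocess(records: List[dict]) -> List[dict]:
    """Drop rows with a missing quantity and cast the rest to int."""
    return [
        {**r, "quantity": int(r["quantity"])}
        for r in records
        if r["quantity"].strip()
    ]


def transform(records: List[dict]) -> List[dict]:
    """Add a derived feature that a model could consume."""
    return [{**r, "low_stock": r["quantity"] < 5} for r in records]


def run_pipeline(data, steps: List[Callable]):
    """Apply each step in order, so every run processes data the same way."""
    for step in steps:
        data = step(data)
    return data


raw = ["A1,12", "B2,", "C3,3"]
features = run_pipeline(raw, [ingest, preprocess, transform])
```

Keeping each stage as a separate function makes it easier to test, version, and rerun individual steps, which is where the consistency and reproducibility benefits come from.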

Your Edge Blog Team: Which tools and methodologies do you find most helpful for automating the deployment, monitoring, and management of machine learning models?

Andrea: It is important to note that not all tools fit every need or use case in the deployment, monitoring, and management of machine learning models. Depending on the specific requirements, infrastructure, and constraints, it may be necessary to build custom or in-house tools to address specific challenges.

As highlighted at the conference, our current approach also involves using Run:ai, which utilizes Kubernetes and Docker to automate and orchestrate AI workloads. We complement this with Weights & Biases to monitor and track experiments, foster collaboration, and facilitate knowledge sharing within the team.

Your Edge Blog Team: What’s the best way to ensure the quality and reliability of AI models in production?

Andrea: Models are not perfect! Models can degrade over time due to changing data patterns or environmental factors. Continuous monitoring is essential to identify performance issues and ensure that models are performing as expected.
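One simple way to picture continuous monitoring is a rolling window over recent predictions whose true labels have since become known. The sketch below is an illustration only; the class name, window size, and threshold are assumptions rather than anything described in the interview.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent labeled predictions."""

    def __init__(self, window=100, threshold=0.9):
        self.outcomes = deque(maxlen=window)  # oldest results fall off automatically
        self.threshold = threshold

    def record(self, prediction, label):
        """Store whether one prediction matched its ground-truth label."""
        self.outcomes.append(prediction == label)

    def accuracy(self):
        """Return accuracy over the window, or None if nothing is recorded yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self):
        """Flag degradation only once the window is full, to avoid noisy early alerts."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold


# Hypothetical stream: four correct predictions, then two misses.
monitor = AccuracyMonitor(window=4, threshold=0.75)
for prediction, label in [("cat", "cat")] * 4:
    monitor.record(prediction, label)
healthy_before = monitor.degraded()
for prediction, label in [("cat", "dog")] * 2:
    monitor.record(prediction, label)
degraded_after = monitor.degraded()
```

In practice an alert like this would typically feed a retraining or rollback decision instead of acting on its own.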

Your Edge Blog Team: What challenges have you encountered when scaling, monitoring, and maintaining AI models in production? And how did you get past them?

Andrea: One of the challenges faced when scaling and maintaining AI models in production is managing models across different edge devices, which often have varying update mechanisms. We are currently working on standardizing deployment frameworks to ensure consistent and efficient model updates across our product portfolio.
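One general way to think about standardizing deployment across devices with different update mechanisms is a shared interface with one adapter per mechanism. The sketch below shows that pattern in the abstract; it is not Zebra's framework, and every class name, device name, and return value here is hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Dict


class ModelUpdater(ABC):
    """Common interface that hides a device family's update mechanism."""

    @abstractmethod
    def push(self, model_version: str) -> str:
        """Deliver a model version and return a status string."""


class OtaUpdater(ModelUpdater):
    """Stand-in for devices that are updated over the air."""

    def push(self, model_version: str) -> str:
        return f"ota:{model_version}"


class PackageUpdater(ModelUpdater):
    """Stand-in for devices that are updated through a packaged install."""

    def push(self, model_version: str) -> str:
        return f"package:{model_version}"


def roll_out(model_version: str, fleet: Dict[str, ModelUpdater]) -> Dict[str, str]:
    """Push one version to every device through the same call."""
    return {device: updater.push(model_version) for device, updater in fleet.items()}


# Hypothetical two-device fleet with different update mechanisms.
fleet = {"handheld-01": OtaUpdater(), "kiosk-07": PackageUpdater()}
statuses = roll_out("v2.3.0", fleet)
```

The rollout code never needs to know which mechanism a device uses, so adding a new device family means writing one new adapter.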

Your Edge Blog Team: How do you and others on the Zebra CTO team engage with the broader AI developer community? And why do you engage with others outside Zebra?

Andrea: We recognize the importance of fostering a strong relationship with the AI developer community. We believe that collaboration and engagement with students, universities, start-ups, and organizations within the AI ecosystem are essential to advancing the state of AI technology, especially the adaptive AI used to inject intelligence into customers’ business operations and support automation across their workflows and decision processes. Therefore, we use various ways to engage with the AI developer community and demonstrate our commitment to this important field.

One of our recent efforts was the London Innovation Expo, which brought together researchers, practitioners, and experts from the industry to share their knowledge and experience. The Expo provided a platform for AI community members to interact with the industrial world and explore new solutions and products. The event was a great success, with attendees praising the opportunity to network and share their ideas with other members of the community.

In addition to hosting events like the London Innovation Expo, we also encourage our researchers to attend and participate in important conferences, contribute to workshops, and participate in AI research projects. I believe these activities help us build strong relationships with other AI community members and support the development of AI technology. Overall, our commitment to engaging with members of the AI community is a crucial part of our mission to advance the state of AI technology.



Zebra’s “Your Edge” Blog Team

The “Your Edge” Blog Team is composed of content curators and editors from Zebra’s Global PR, Thought Leadership and Advocacy team. Our goal is to connect you with the industry experts best-versed on the issues, trends and solutions that impact your business. We will collectively deliver critical news analysis, exclusive insights on the state of your industry, and guidance on how your organization can leverage a number of different proven technology platforms and strategies to capture your edge.