What to Know Before You Try to Train (and Test) a Deep Learning Application

AI must fail over and over to succeed. But there are several mistakes you want to avoid making when teaching an AI-driven application how to look for anomalies on a product, component, or label.

If you read my colleague Jim Witherspoon’s primer on deep learning optical character recognition (OCR), then you heard that the way deep learning works isn’t much different than a human’s learning process.

Like your brain, deep learning tools utilize a mesh of artificial neural networks that requires training to become ‘more intelligent’. The main difference is that, unlike artificial intelligence (AI), your brain’s neural networks have been learning for years – you’ve literally been training them on hundreds of thousands of images every single day without even noticing it. That’s why your brain can easily ‘spot the difference’ between two pictures fairly quickly if asked, even if you don’t know what the object you’re looking at really is.

However, an AI-driven application – once trained properly – will spot the differences much faster. Of course, there are still some limitations to this technology. But deep learning models no longer require as much data as they did a few years ago to become reliable. Moreover, if something runs too slowly, you can always upgrade your PC hardware to train and execute the deep learning models faster. That’s a huge advantage, seeing that we cannot (yet) upgrade the human brain comparably. That’s why AI, machine learning, and deep learning are so exciting. If trained properly, this technology can do what we do and help us solve different problems, but so much faster and with more accuracy.

Proper training is key for a successful deep learning application, though. I can’t stress enough that if you don’t use the right tactics to teach the AI the difference between ‘right and wrong’, it will fail to grasp the concepts you’re trying to instill. (Just like a person.)

So, I thought I could share the things my colleagues and I have seen people of all experience and skill levels get wrong when trying to train or test a deep learning application in real-world scenarios like production, fulfillment, sorting, packing, and quality control. Of course, I also want to talk about the right way to train a deep learning model and, subsequently, evaluate the effectiveness of that training. However, before we get to that, let’s focus on the basics and discuss what you need to effectively train your deep learning model.

Step One: Prepare your Datasets (and Know What Kind of Data to Use for ‘Training’ vs. ‘Test’)

You can’t train a deep learning model if you aren’t using the right type of data for training, and I often see people trying to use the same datasets for training and testing purposes, which is a problem.

For a deep learning model to work effectively, it has to be exposed to a set of data in order to learn what we require from it. The set of training data used during the training process is called the ‘training dataset’ whereas another, completely different set, used to test the model is called the ‘test dataset’. There may also be a ‘validation dataset’ sometimes, which is used during the training for validation but not for training. But to keep things simple, let’s just stick to ‘training’ and ‘test’ datasets for now.

If we compare an AI-driven application to a high-school student, we could say that the training dataset is like all the learning materials used in class (where each image would be equal to one book or article approaching the same topic from a slightly different angle) and the test dataset is like the end-of-term exam, during which we test if the student has learned all the knowledge and can put it into practice to solve the problems at hand.

The first golden rule when preparing your datasets is NEVER TO MIX training and test datasets. The reason behind this is very simple: If you’re testing a deep learning model using the exact same dataset it has already seen during the training, how can you be sure if it can apply what it has learned in a completely new situation (for example when analyzing new images)? Well… you can’t. And, more often than not, it won’t be able to. You can’t test the model using training images for the very same reason teachers don’t give their students the test questions ahead of time. They want to see if they can apply the lessons taught in class to other situations – they want to see what they derived and retained from their training.
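To make the ‘never mix’ rule concrete, here is a minimal sketch of how a list of images could be split into two sets that cannot overlap. The file names, the 20% test share, and the `split_dataset` helper are my own assumptions for illustration, not part of any particular deep learning tool.

```python
import random

def split_dataset(image_paths, test_fraction=0.2, seed=0):
    """Shuffle once, then cut the list so no image lands in both sets."""
    paths = sorted(set(image_paths))   # drop repeated entries, fix the order
    rng = random.Random(seed)          # seeded so the split is reproducible
    rng.shuffle(paths)
    n_test = max(1, round(len(paths) * test_fraction))
    return paths[n_test:], paths[:n_test]   # (train, test)

# Hypothetical file names, used only for illustration.
images = [f"bolt_{i:03d}.png" for i in range(50)]
train, test = split_dataset(images)

# The two sets share nothing, and together they cover every image.
assert not set(train) & set(test)
assert set(train) | set(test) == set(images)
```

Because the list is cut at a single index after one shuffle, an image can only ever end up on one side of the split.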

Another rule is that you need diversity in your images. Your deep learning model, in order to learn effectively, needs to be fed with many variations of the same feature. Let’s say that you want to analyze defects on a bolt’s thread. You can’t just take one bolt with a defective thread and take, let’s say, 20 or 50 images of the same item, just with some minor changes to the illumination or the orientation angle. That is cheating – and forbidden. The deep learning model needs to be able to recognize many different anomalies, which may include the wrong part or component coming down the line. So, whenever you are training a deep learning model, you must prepare a training dataset (e.g. images) of 20-50 different – I mean truly different – objects. In this case, that would mean 20-50 bolts with similar but different defects of the thread.
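A quick way to catch the ‘one bolt, 50 photos’ problem is to count how many distinct physical objects are behind your images. The sketch below assumes a hypothetical naming convention of `<object_id>_<view>.png`; if your files are named differently, the grouping line would need to change. The threshold of 20 simply mirrors the lower end of the 20-50 range mentioned above.

```python
from collections import Counter

def images_per_object(image_names):
    """Count images per physical object, assuming '<object_id>_<view>.png' names."""
    return Counter(name.split("_", 1)[0] for name in image_names)

def check_diversity(image_names, min_objects=20):
    """Return (is_diverse_enough, per-object image counts)."""
    counts = images_per_object(image_names)
    return len(counts) >= min_objects, counts

# 50 images of the very same bolt: plenty of files, only one object.
bad = [f"bolt01_view{v}.png" for v in range(50)]
# 30 different bolts, one image each.
good = [f"bolt{i:02d}_view0.png" for i in range(1, 31)]

print(check_diversity(bad)[0])    # False
print(check_diversity(good)[0])   # True
```

A count like this only tells you how many objects you have. Whether their defects are truly different is still something you have to judge by looking at the images.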

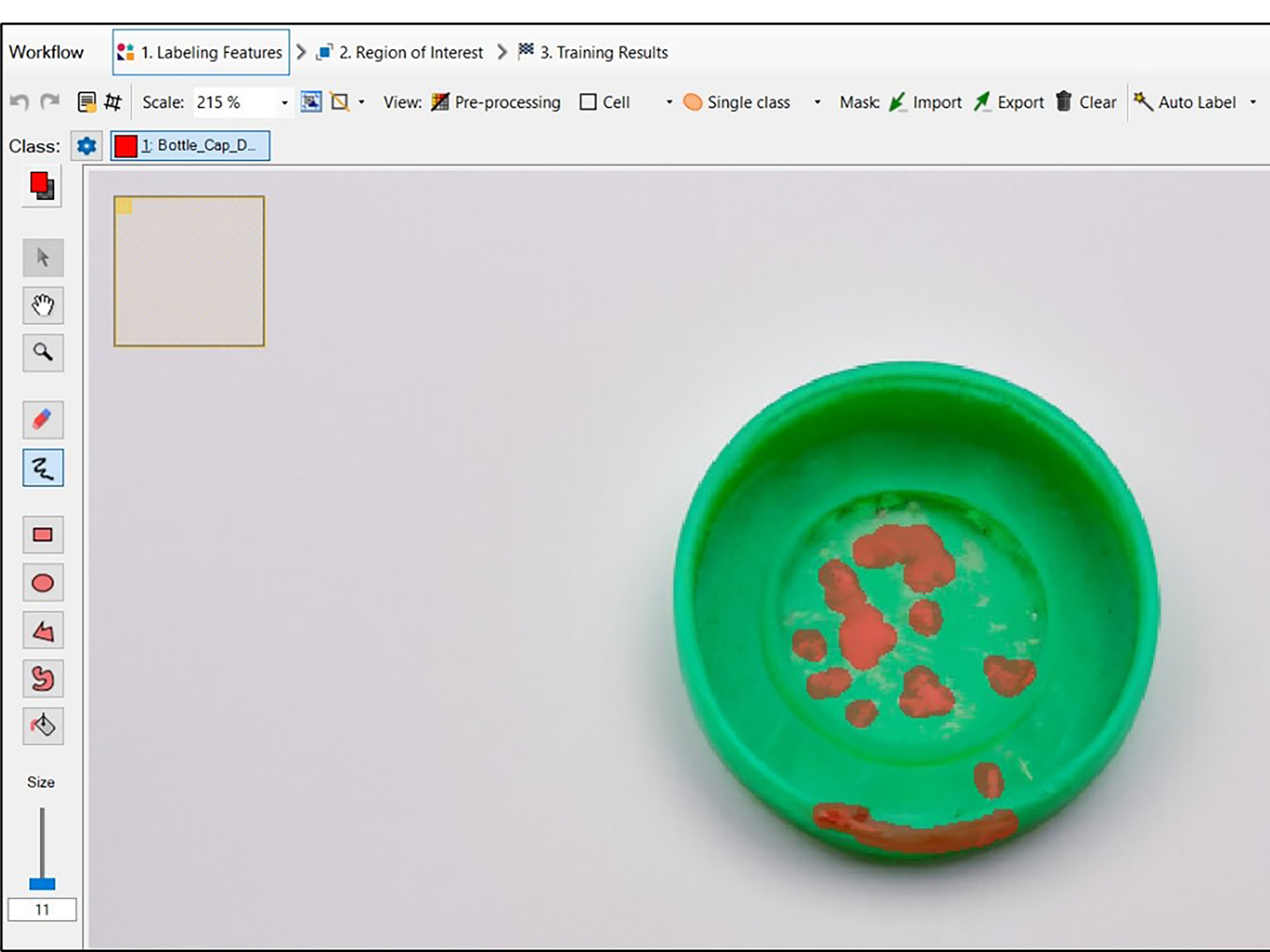

Caption: An example of a bad training dataset. The same defect (wood knot) was captured a couple of times, with only its rotation or positioning slightly changed. To teach a deep learning model to identify such a defect, the training dataset must include images of many different types of wood knots.

The last rule is: Don’t try to train on defects located at the edge of the image. This is a tricky problem for deep learning models because the accuracy of neural networks is usually lower at the edges, where less surrounding context is available. Although people can still see things quite well at the edge of an image (or at least they think they can), it is recommended that the field of view in real applications ensures some margin around objects under inspection.
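If your defect annotations are stored as bounding boxes, a simple filter can flag the ones that sit too close to the border. This is only a sketch: the `(x_min, y_min, x_max, y_max)` box format and the 5% margin are assumptions I picked for illustration, and the right margin depends on your application.

```python
def too_close_to_edge(box, image_size, margin_fraction=0.05):
    """Flag a box that enters the outer margin of the image.

    box        -- (x_min, y_min, x_max, y_max) in pixels
    image_size -- (width, height) in pixels
    """
    x_min, y_min, x_max, y_max = box
    width, height = image_size
    margin_x = width * margin_fraction
    margin_y = height * margin_fraction
    return (
        x_min < margin_x
        or y_min < margin_y
        or x_max > width - margin_x
        or y_max > height - margin_y
    )

# A defect touching the left border of a 640x480 image is flagged...
print(too_close_to_edge((0, 100, 40, 140), (640, 480)))     # True
# ...while one near the center is not.
print(too_close_to_edge((300, 200, 340, 240), (640, 480)))  # False
```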

So, to sum things up, in order to effectively train a deep learning model, you have to:

- Prepare two different datasets, one for training and training only and another one for testing your model.

- Make sure that the training dataset does not contain any data (e.g. images) that is present in the test dataset.

- Prepare a varied training and test dataset containing truly different objects (e.g. different pieces of the same product) with the same type of feature (e.g. defect) which you want to teach the model to recognize.

- Don’t use images where defects are located at the edges of the image for training.
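Even with separate folders, the same picture sometimes sneaks into both datasets under a different file name. One way to catch that, sketched below, is to compare content hashes rather than names. The dictionaries of raw bytes stand in for files you would read from disk, and the helper names are hypothetical.

```python
import hashlib

def content_hashes(images):
    """Map SHA-256 digest -> name for a dict of {name: raw_bytes}."""
    return {hashlib.sha256(data).hexdigest(): name for name, data in images.items()}

def find_leaks(train_images, test_images):
    """Return (train_name, test_name) pairs whose bytes are identical."""
    train_hashes = content_hashes(train_images)
    test_hashes = content_hashes(test_images)
    shared = train_hashes.keys() & test_hashes.keys()
    return sorted((train_hashes[h], test_hashes[h]) for h in shared)

# Placeholder bytes stand in for real image files.
train_images = {"a.png": b"\x01\x02", "b.png": b"\x03"}
test_images = {"copy_of_a.png": b"\x01\x02", "c.png": b"\x04"}

print(find_leaks(train_images, test_images))  # [('a.png', 'copy_of_a.png')]
```

Keep in mind that this only finds byte-identical copies. A re-saved, resized, or slightly cropped version of the same image will get a different hash, so it is a first check and not a guarantee.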

You must also get your annotations right – and know when it’s best not to annotate something.

The Art of Annotation

There are many types of deep learning tools. Some can be trained in an unsupervised mode (e.g. anomaly detection) in which you just provide the network with a set of images without marking anything on them, whereas other tools require some sort of annotation to teach the neural network what you want it to look for. In a case where annotations are required, you need to remember that the annotations you make on your training dataset will influence the AI’s ability to learn (and retain) the right lesson.

The golden rule here is simple – do not mix your annotations. If you mark one defect type on some images in the dataset and another defect type on other images and then submit all of them as the same training dataset, you will essentially teach the AI a very bad habit. The neural network does not extrapolate from examples. It looks at the markings on the image, and those markings must be very precise so the AI can be taught with very clear training patterns.

Let’s say you want to detect scratches on a plastic surface, but some samples also have chipped-off corners. You must either mark the scratches, leaving the damaged corners without any annotations (and thus not teaching the AI that this is some sort of defect) OR train a model that can recognize two different classes of defects: scratches and damaged corners. In the second scenario, you must mark all the scratches as Defect One and all chipped corners as Defect Two. This way, the deep learning model, if trained properly, will learn to recognize both defects and will be able to differentiate between them. However, it will never work if you just mark all the different defects within the image as one class and then use these images for training.

Caption: An example of an incorrect defect annotation. There are clearly two defects on the cap, which are not separated into two different classes but instead marked as one defect.
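If your annotations are exported as a list of labeled regions, you can at least check mechanically that every label belongs to one of the classes you decided on up front. The record layout and the class names `scratch` and `chipped_corner` below are hypothetical, chosen to match the plastic-surface example.

```python
# Hypothetical class names for the two-class scenario described above.
ALLOWED_CLASSES = {"scratch", "chipped_corner"}

def find_bad_labels(annotations, allowed=ALLOWED_CLASSES):
    """Return (image, label) pairs whose label is not a declared class."""
    return [(a["image"], a["label"]) for a in annotations if a["label"] not in allowed]

annotations = [
    {"image": "cap_001.png", "label": "scratch"},
    {"image": "cap_001.png", "label": "chipped_corner"},
    {"image": "cap_002.png", "label": "defect"},  # generic catch-all label
]

print(find_bad_labels(annotations))  # [('cap_002.png', 'defect')]
```

A check like this catches catch-all labels such as ‘defect’, but it cannot tell whether a region marked as a scratch really is a scratch. That part still requires a careful human review.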

Unlike our brains, deep learning models are not able to recognize ‘a defect’ in its general sense. We humans can do that because, as mentioned before, we’ve been teaching our brains to spot things every single day for years. Deep learning models can, however, identify defects like scratches or chipped-off elements perfectly and much faster than us, provided that we teach them this ‘skill’ properly.

There are, of course, exceptions to this rule (e.g. anomaly detection where you can teach the model using good samples and the model will detect any deviations from the learned pattern). But if your model is trained in a supervised mode to recognize a particular thing, then you need to make sure that you feed it the right data and get your annotations right.

So, are there any rules or tricks here?

Of course there are:

Use the right annotation tools that can help you tackle the problem at hand. In some cases, using the brush tool is best; in other scenarios, a bounding box is more convenient and appropriate. For example, if you want the deep learning model to detect bananas, and you create oriented bounding boxes, remember that the two ends of a banana are different. So, you must account for the potential 180-degree rotation. If you want it to detect oranges, though, you must change the way you mark the image. Oranges do not have orientation, so a non-rotating bounding box is more appropriate. Scratches or small defects like chips will work best with free-hand style annotation tools, like the brush.

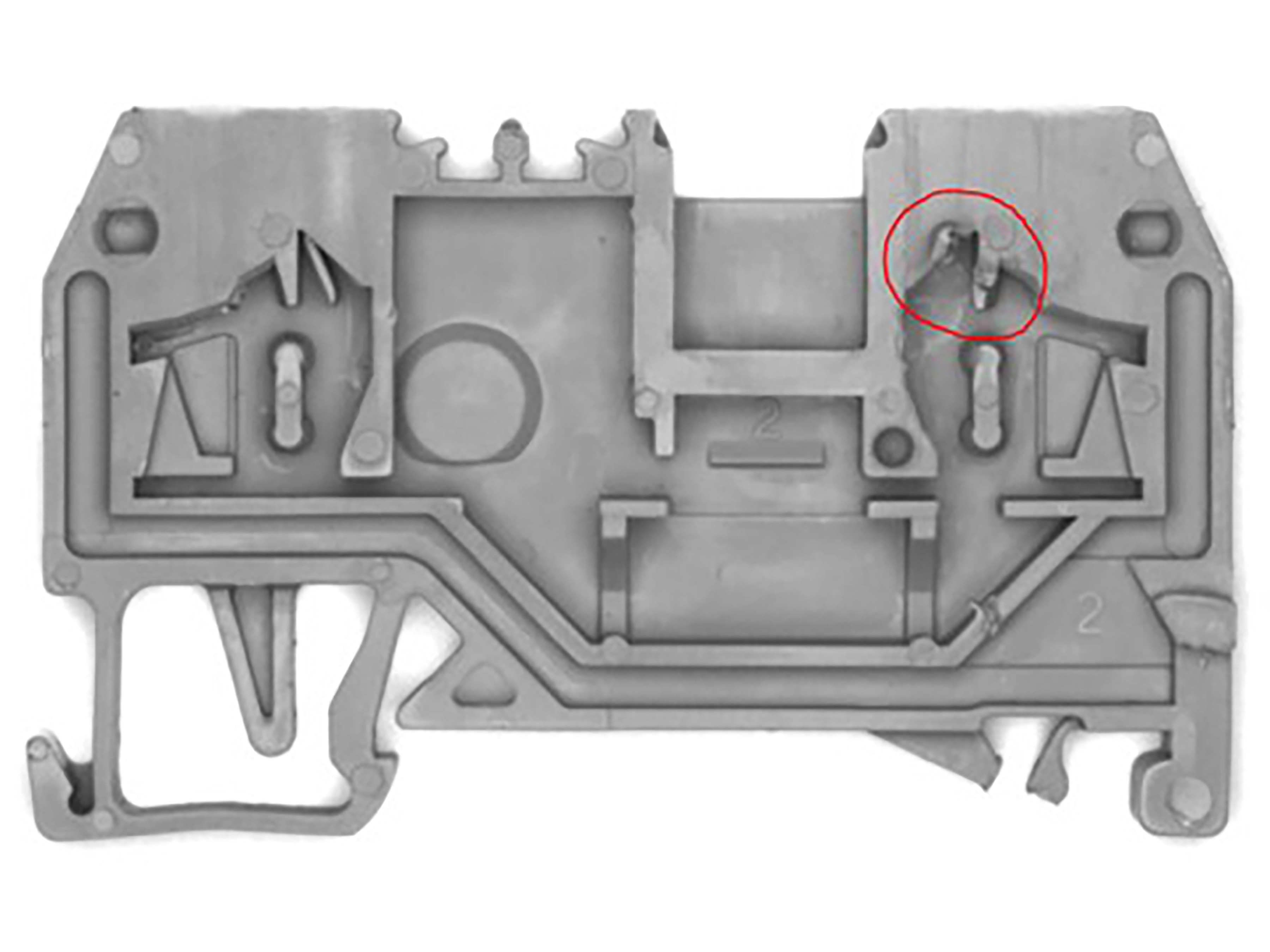

Keep your images clean and, whatever you do, please do not mark up images with arrows or circles to show the location of defects. Such samples have zero value for training (or testing) as the neural network will see the markings and will make judgments using them. Don’t think about artificially removing these parts in Photoshop, either. The neural network will detect this too. If someone sends you images with defect markings, the best thing you can do is to ask for clean images that can be used during training.

Caption: An image with a defect marking
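Coming back to the banana example, here is a rough sketch of why the 180-degree rotation matters for oriented bounding boxes. It assumes the annotator clicks two points per object, a stem end and a tip end, in pixel coordinates. Both helpers are hypothetical and not taken from any specific annotation tool.

```python
import math

def oriented_angle(stem, tip):
    """Angle in degrees, in [0, 360), of the direction from stem to tip.

    Use this when the two ends of the object differ (e.g. a banana).
    Pixel coordinates have y pointing down, so the angle grows clockwise on screen.
    """
    dx = tip[0] - stem[0]
    dy = tip[1] - stem[1]
    return math.degrees(math.atan2(dy, dx)) % 360.0

def symmetric_angle(end_a, end_b):
    """Angle folded into [0, 180), for objects whose two ends look the same."""
    return oriented_angle(end_a, end_b) % 180.0

# The same banana annotated in opposite directions gives angles 180 degrees apart.
forward = oriented_angle((0, 0), (10, 0))
backward = oriented_angle((10, 0), (0, 0))
print(forward, backward)
```

If half of your bananas are annotated stem-to-tip and the other half tip-to-stem, the model receives two contradictory angles for what is visually the same pose. For round objects such as oranges there is no meaningful angle at all, which is why a non-rotating box is the better fit there.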

If you are prepping your datasets for training and want a second opinion on their quality or you’re experiencing some issues during training and need assistance, feel free to contact our Zebra Aurora Vision Support Team at avdlsupport@zebra.com.

And if you want to find out what we’ve learned about testing a deep learning application, stay tuned to the blog because I’ll share best practices and common mistakes in my next post.

###

Related Resources

- A Deeper Dive on Deep Learning OCR

- Deep Learning Isn’t a “Bleeding Edge” Technology, but It Can Help Stop the Bleed at the Edge of Your Business. Here’s How.

- Ask the Expert: Is There a Difference Between Machine Learning and Deep Learning?